Unveiling the Truth: Does ChatGPT Run on Azure?

It's time to uncover the truth about ChatGPT and Azure. As technology advances, organizations keep looking for efficient, cost-effective ways to use AI. In this article, we explore how ChatGPT integrates with Azure services and what advantages and cost savings that integration brings. So, let's dive in and find out whether ChatGPT runs on Azure!

Key Takeaways:

- ChatGPT can indeed run on Azure, thanks to Microsoft's integration efforts.

- The Maia 100 AI Accelerator and Cobalt 100 CPU are custom-designed chips for Azure, enhancing its AI architecture.

- Integration with Azure services simplifies deployment processes and optimizes machine learning model performance.

- Azure's custom silicon brings scalability, end-to-end performance, and cost savings.

- ChatGPT on Azure offers a powerful platform for organizations to harness the potential of AI technology.


Integration with Azure Services and Deployment Options on Azure

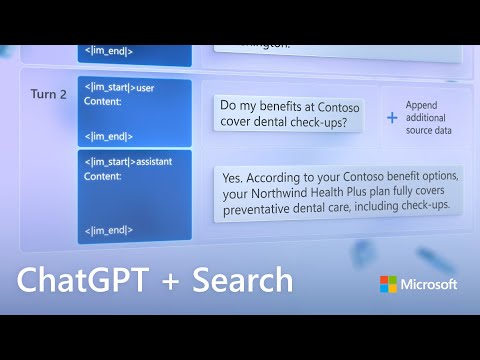

Microsoft's custom-designed chips, the Maia 100 AI Accelerator and the Cobalt 100 CPU, integrate with a range of Azure services, giving organizations flexible deployment options for ChatGPT on the Azure platform. One of the key integration points for ChatGPT is Azure ML's managed online endpoints. These simplify deployment by removing the need for organizations to manage different frameworks and infrastructure themselves. Instead, they can rely on Azure ML's infrastructure to deploy ChatGPT models for production use.
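To make the managed online endpoint idea more concrete, here is a minimal sketch of how a client might prepare a call to such an endpoint over HTTPS. It only builds the request and does not send it. The endpoint URL, the key, the deployment name, and the payload shape are all placeholders chosen for illustration; the real payload depends on the model and scoring logic behind your endpoint. The `azureml-model-deployment` header is the one Azure ML uses to route a request to a specific deployment; leaving it out lets the endpoint's traffic rules decide.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, api_key, payload, deployment=None):
    """Build (but do not send) a POST request for a managed online endpoint.

    scoring_uri, api_key, and deployment are placeholders supplied by the caller.
    """
    headers = {
        "Content-Type": "application/json",
        # Key-based and token-based auth both use a Bearer header.
        "Authorization": f"Bearer {api_key}",
    }
    if deployment is not None:
        # Optional: pin the request to one named deployment.
        headers["azureml-model-deployment"] = deployment
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        scoring_uri, data=body, headers=headers, method="POST"
    )


# Hypothetical values, for illustration only.
request = build_scoring_request(
    "https://example-endpoint.eastus.inference.ml.azure.com/score",
    "PLACEHOLDER_KEY",
    {"prompt": "Hello"},
    deployment="blue",
)
```

Passing the resulting object to `urllib.request.urlopen` would send it; that step is left out here because it needs a live endpoint and valid credentials.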

An important component of this integration is the use of the Triton Inference Server in Azure ML. Triton is open-source software that helps organizations optimize the performance of their machine learning models. With support for popular frameworks like TensorFlow, ONNX, and PyTorch, the Triton Inference Server ensures compatibility with ChatGPT models. This compatibility allows organizations to achieve high-performance inferencing and maximize the throughput and utilization of their models. The deployment also benefits from safe rollout, security, and monitoring, providing a reliable and efficient environment for ChatGPT on Azure.
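For a rough sense of what talking to a Triton-served model looks like, the sketch below builds an inference request in the KServe v2 JSON format that Triton's HTTP API accepts. The model name, the input tensor name, and the tensor values are assumptions made for illustration; a real model defines its own input names, shapes, and datatypes in its configuration. The helper only builds the path and body and does not contact a server.

```python
import json


def build_triton_infer_request(model_name, input_name, shape, datatype, data):
    """Return (url_path, json_body) for a KServe v2 style inference call.

    data is a flat, row-major list whose length must match the shape.
    """
    expected = 1
    for dim in shape:
        expected *= dim
    if len(data) != expected:
        raise ValueError(
            f"shape {tuple(shape)} needs {expected} values, got {len(data)}"
        )
    path = f"/v2/models/{model_name}/infer"
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": datatype,
                "data": list(data),
            }
        ]
    }
    return path, json.dumps(body)


# Hypothetical model and tensor names, for illustration only.
path, body = build_triton_infer_request(
    "example_model", "INPUT0", (1, 4), "FP32", [0.1, 0.2, 0.3, 0.4]
)
```

Checking the element count against the shape before sending is a small design choice that surfaces a malformed tensor on the client side instead of as a server error.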

Deploying ChatGPT on Azure with these integrated solutions is not only more efficient but also saves money. By leveraging Azure's infrastructure and services, organizations avoid the upfront costs of building and maintaining their own hardware stack, so they can focus resources on developing and fine-tuning their ChatGPT models rather than on infrastructure management. Additionally, Microsoft's custom-designed chips, the Maia 100 AI Accelerator and the Cobalt 100 CPU, offer enhanced performance and scalability. These chips optimize the execution of ChatGPT models, enabling faster model training and inference and adding to the cost savings and efficiency of deploying ChatGPT on Azure.

In summary, the integration of ChatGPT with Azure services and the deployment options available on the Azure platform provide organizations with a powerful solution for leveraging AI capabilities. The seamless integration with Azure ML's managed online endpoints simplifies deployment processes, while the use of the Triton Inference Server ensures high-performance inferencing. The cost savings and performance enhancements provided by Microsoft's custom-designed chips further enhance the value of deploying ChatGPT on Azure. With these integrated solutions, organizations can unlock the full potential of ChatGPT and Azure, driving innovation and realizing the benefits of AI technology.

Advantages and Cost Savings with Azure's Custom Silicon

Microsoft's integration of ChatGPT with Azure services harnesses the power of two custom-designed chips: the Maia 100 AI Accelerator and the Cobalt 100 CPU. This integration provides several advantages and cost savings for organizations seeking to leverage AI capabilities. The Maia 100 AI Accelerator, designed specifically for running large language models, offers enhanced performance and faster model training and inference. With 105 billion transistors and custom Ethernet-based network protocols, it delivers scalability and end-to-end performance, making it a good fit for organizations with high-demand AI workloads.

The Cobalt 100 CPU, on the other hand, optimizes general Azure computing workloads, further enhancing the overall performance of AI services on Azure. By reducing reliance on specialized hardware, Microsoft's custom silicon offers a more cost-effective cloud hardware stack. This innovation aligns with Microsoft's commitment to providing organizations with scalable, efficient, and affordable AI solutions.

Microsoft developed its custom-designed chips for Azure in collaboration with OpenAI, and that work translates into significant cost savings for organizations. The Maia 100 AI Accelerator and the Cobalt 100 CPU optimize Azure's AI architecture, enabling the training of new AI models that are both more capable and more cost-efficient than existing alternatives. This combination of performance, cost savings, and compatibility with Azure services positions ChatGPT and Azure as a compelling solution for organizations looking to harness the potential of AI technology.

Table: Advantages of Azure's Custom Silicon

| Advantage | Description |
|---|---|
| Enhanced Performance | The Maia 100 AI Accelerator provides improved performance, enabling faster model training and inference times. |
| Scalability | With 105 billion transistors and custom network protocols, the Maia 100 AI Accelerator offers scalability for high-demand AI workloads. |
| Cost Savings | Microsoft's custom-designed chips offer a more cost-effective cloud hardware stack, reducing reliance on specialized hardware. |
| Compatibility | The integration of custom silicon with Azure services allows seamless compatibility and efficient utilization of AI capabilities. |

By leveraging Azure's powerful integrated solutions, organizations can unlock the full potential of AI with ChatGPT and drive innovation without compromising on performance or budget. The deployment of ChatGPT on Azure becomes efficient, streamlined, and cost-effective, enabling organizations to focus on their machine learning models and achieve valuable business insights.

Conclusion

In conclusion, the integration of ChatGPT with Azure services reaffirms Microsoft's commitment to delivering cutting-edge AI models across all its offerings. With the introduction of the Maia 100 AI Accelerator and the Cobalt 100 CPU, Microsoft showcases its dedication to custom silicon for Azure, optimizing performance and cost savings for organizations.

These chips bring scalability and end-to-end performance to AI services on the Azure platform, making ChatGPT and Azure an enticing combination for businesses. The inclusion of the Triton Inference Server in Azure ML further streamlines the deployment of ChatGPT, enhancing efficiency and simplicity.

By leveraging Azure's managed online endpoints and monitoring capabilities, organizations can focus on their machine learning models and drive business value without the burden of infrastructure maintenance. ChatGPT on Azure provides a robust platform for AI deployment, unlocking new possibilities for organizations seeking to harness the potential of AI technology.

FAQ

Does ChatGPT run on Azure?

Yes. ChatGPT can run on Azure and integrates with Azure services.

How can ChatGPT be deployed on Azure?

ChatGPT can be deployed on Azure using Azure ML's managed online endpoints.

What are the advantages of integrating ChatGPT with Azure services?

Integrating ChatGPT with Azure services offers benefits such as simplified deployment processes, optimized performance, and cost savings.

How does Azure optimize the performance of machine learning models?

Azure utilizes the Triton Inference Server in Azure ML, which supports frameworks like TensorFlow, ONNX, and PyTorch, to optimize the performance of machine learning models.

Is deploying ChatGPT on Azure cost-effective?

Yes, deploying ChatGPT on Azure with the Maia 100 AI Accelerator and the Cobalt 100 CPU provides cost savings for organizations.

Can ChatGPT and Azure be integrated for general computing workloads?

Yes, the Cobalt 100 CPU brings performance improvements for general Azure computing workloads, enhancing the integration of ChatGPT with Azure.

What makes Azure's custom silicon advantageous for AI deployment?

Azure's custom-designed chips, the Maia 100 AI Accelerator and the Cobalt 100 CPU, offer scalability, end-to-end performance, and compatibility with Azure services, making them ideal for AI deployment.

Source Links

- https://www.toolify.ai/ai-news/accelerate-model-inference-in-azureml-with-triton-inference-server-49586

- https://101empiretech.com/microsoft-is-now-making-its-own-arm-processors-for-ai-and-cloud-workloads-empire-tech/

- https://www.techspot.com/news/100864-microsoft-unveils-first-homegrown-chips-ai-cloud-workloads.html
